Difference between revisions of "Nvidia Profile Inspector"

m (combined NvGames.dll references) |

m (Replaced dead link with Wayback Machine link.) |

||

| (151 intermediate revisions by 36 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{ | + | {{Infobox software |

| − | [ | + | |cover = Nvidia Profile Inspector.png |

| − | {{ | + | |developers = |

| − | | | + | {{Infobox game/row/developer|Orbmu2k}} |

| − | | | + | |release dates= |

| + | {{Infobox game/row/date|Windows|June 20, 2010|ref=<ref>{{Refcheck|user=Aemony|date=2020-07-24|comment=The [https://web.archive.org/web/20100629152945/http://blog.orbmu2k.de:80/tools/nvidia-inspector-tool original website] dates v1.90 as having been released on June 20, 2010. This seems to have been the version that brought along with it the ability to tweak game/driver profile settings. The main Nvidia Profile tool itself was publicly released on April 24, 2010.}}</ref>}} | ||

| + | |winehq = | ||

| + | |wikipedia = | ||

| + | |license = freeware | ||

| + | }} | ||

| + | {{Tocbox}} | ||

| + | |||

| + | {{Introduction | ||

| + | |introduction = '''''Nvidia Profile Inspector''''' (NPI) is an open source third-party tool created for pulling up and editing application profiles within [[Nvidia (GPU)|the Nvidia display drivers]]. It works much like the '''Manage 3D settings''' page in the [[Nvidia Control Panel]] but goes more in-depth and exposes settings and offers functionality not available through the native control panel. | ||

| + | |||

| + | |release history = The tool was spun out of the original [http://www.guru3d.com/files-details/nvidia-inspector-download.html Nvidia Inspector] which featured an overclocking utility and required the user to create a shortcut to launch the profile editor separately. | ||

| + | |||

| + | |current state = ''Nvidia Profile Inspector'' typically sees maintenance updates released every couple of months, keeping it aligned with changes introduced in newer versions of the drivers, although the project was seemingly on hiatus between January 19, 2021 and November 13, 2022. An unofficial fork with improved support for newer drivers was released in April 2022, but it is no longer available now that the original author has released an official update. | ||

}} | }} | ||

| − | ''' | + | '''General information''' |

| − | {{ | + | {{mm}} [[List of HBAO+ compatibility flags for Nvidia|HBAO+ Compatibility Flags]] |

| − | {{ | + | {{mm}} [[List of anti-aliasing compatibility flags for Nvidia|Anti-Aliasing Compatibility Flags]] |

| − | {{ | + | {{mm}} [https://www.forum-3dcenter.org/vbulletin/showthread.php?t=509912 SLI Compatibility Flags] (German) |

| − | {{ | + | {{mm}} [https://github.com/Orbmu2k/nvidiaProfileInspector Official repository] |

| − | {{ii}}[https:// | + | |

| + | '''Related articles''' | ||

| + | {{ii}} [[Nvidia]] | ||

| + | {{ii}} [[Nvidia Control Panel]] | ||

| + | |||

| + | ==Availability== | ||

| + | {{Availability| | ||

| + | {{Availability/row|1= Official |2= https://github.com/Orbmu2k/nvidiaProfileInspector/releases |3= DRM-Free |4= |5= |6= Windows }} | ||

| + | }} | ||

| − | == | + | ==Installation== |

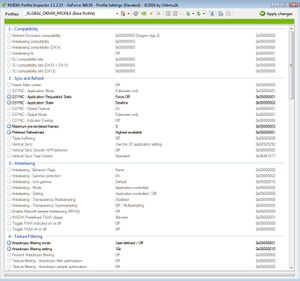

| − | + | {{Image|NPI2016.jpg|Main window of the global/base profile.}} | |

| − | |||

| − | |||

| − | |||

| − | + | {{Fixbox|description=Instructions|fix= | |

| − | {{Fixbox| | + | # Download the [https://github.com/Orbmu2k/nvidiaProfileInspector/releases latest build]. |

| − | + | # Save and extract it to an appropriate permanent location. | |

| − | # [https:// | ||

| − | # Save it to | ||

# Run <code>nvidiaProfileInspector.exe</code> to start the application. It may be worth making a shortcut and pinning it to your start menu or desktop. | # Run <code>nvidiaProfileInspector.exe</code> to start the application. It may be worth making a shortcut and pinning it to your start menu or desktop. | ||

| + | |||

| + | '''Status indicators''' | ||

| + | * '''White cog''' - Global default / Unchanged. | ||

| + | * '''Grey cog''' - Global override through the base <code>_GLOBAL_DRIVER_PROFILE</code> profile. | ||

| + | * '''Blue cog''' - Profile-specific user-defined override. | ||

| + | * '''Green cog''' - Profile-specific Nvidia predefined override. | ||

}} | }} | ||

| − | + | ===First steps for tweaking driver settings=== | |

| − | '' | + | {{Fixbox|description=Select (or create if missing) the display driver profile for the game|fix= |

| − | + | # Download and run the latest version of the Profile Inspector, see [[#Installation|Installation]]. | |

| − | + | # Using the '''Profiles''' list on top of the window, select the game-specific profile if one exists. If one does not exist, create a new one: | |

| − | < | + | ## Click on the '''Create new profile''' icon (the sun). |

| − | + | ## Give the profile a relevant name. The name of the game is often used. | |

| + | ## Click on the '''Add application to current profile''' icon (a small window with an icon of binoculars with a "+" above it). | ||

| + | ## Navigate to {{p|game}} and select the game executable and click '''Open'''. | ||

| + | ##* If you get a message that {{file|Filename.exe}} already belongs to a profile, verify once more that the application does not already exist in the '''Profiles''' list, then re-select the executable with the file format in the selection dialog set to <code>Application Absolute Path (*.exe)</code> instead of the default <code>Application EXE Name (*.exe)</code>. | ||

| + | # Tweak the desired settings. | ||

| + | # Finally click on '''Apply changes''' to save the changes. | ||

| + | }} | ||

| + | ==Video== | ||

| + | ===[[Glossary:Anisotropic filtering (AF)|Anisotropic filtering (AF)]]=== | ||

| + | {{Image|Nvidia Profile Inspector Anisotropic Filtering Override.png|Example of a global override.}} | ||

| − | + | {{ii}} [http://images.nvidia.com/geforce-com/international/comparisons/just-cause-3/just-cause-3-nvidia-control-panel-anisotropic-filtering-interactive-comparison-001-on-vs-off-rev.html This is an example] of why it may be relevant to force high texture filtering globally as some developers' own solutions might not be as high quality as they could be. | |

| − | + | {{--}} There is a risk of negative side effects occurring in some games as a result of a forced global driver-level override, with unexpected consequences ranging from textures looking wrong to some post-processing shaders being affected if they rely on a texture that has an unintended level of filtering applied to it. If Nvidia becomes aware of a game that has a conflict with driver-forced texture filtering, they may set a special flag on the game profile for texture filtering that disables driver overrides for that game, such as with [[Far Cry 3]] and [[Doom (2016)]], though this is not a guarantee as proper in-depth compatibility testing is impossible to perform for all games.<ref>{{Refcheck|user=Aemony|date=2023-09-30|comment=An anisotropic filtering (AF) driver override is too widespread and have both subtle and obvious consequences that it is impossible to say for sure how "safe" it is to enforce. Many users are also not aware of its potential impact on textures and non-texture alike to the degree that a user can mistake a visual bug caused by an AF override as being either intentional or incompetency by the developers, or a minor visual glitch that the developers have not come around to fixing yet, and so users often end up dismissing the visual glitches, never realizing it is of their own creation.}}</ref> | |

| − | + | {{mm}} A quicker way of doing this is to use [[Nvidia Control Panel]] and set '''Anisotropic filtering''' to <code>16x</code> and '''Texture filtering - Quality''' to <code>High quality</code> under '''Manage 3D settings''' > '''Global Settings'''. | |

| − | + | ||

| − | < | + | {{Fixbox|description=Force highest available texture filtering settings system-wide|fix= |

| − | == | + | # Download and run the latest version of the Profile Inspector, see [[#Installation|Installation]]. |

| − | * ''' | + | # Select the <code>_GLOBAL_DRIVER_PROFILE (Base Profile)</code> profile. |

| − | ''' | + | # Configure the relevant settings under '''Texture Filtering''' as shown below ([[:File:Nvidia_Profile_Inspector_Anisotropic_Filtering_Override.png|or in this image]]): |

| − | + | #* '''Anisotropic filtering mode''' to {{Code|User-defined / Off}} | |

| − | + | #* '''Anisotropic filtering setting''' to {{Code|16x}} | |

| − | + | #* '''Texture filtering - Anisotropic filter optimization''' to {{Code|Off}} | |

| − | + | #* '''Texture filtering - Anisotropic sample optimization''' to {{Code|Off}} | |

| − | ''' | + | #* '''Texture filtering - Driver Controlled LOD Bias''' to {{Code|On}} |

| − | + | #* '''Texture filtering - Negative LOD bias''' to {{Code|Clamp}} | |

| − | + | #** Setting '''Texture filtering - Negative LOD bias''' to {{Code|Clamp}} can lower the quality of DLSS and other [[Glossary:Anti-aliasing_(AA)#Reconstruction_methods|Reconstruction Methods]] that rely on a negative LOD bias.{{cn}} | |

| − | + | #* '''Texture filtering - Quality''' to {{Code|High quality}} | |

| − | + | #* '''Texture filtering - Trilinear optimization''' to {{Code|Off}} | |

| − | + | # Finally click on '''Apply changes''' to save the changes. | |

| − | + | }} | |

| + | |||

| + | ===Ambient occlusion (AO)=== | ||

| + | {{Fixbox|description=Force HBAO+ for a select game|fix= | ||

| + | # Download and run the latest version of the Profile Inspector, see [[#Installation|Installation]]. | ||

| + | # Select the game profile, or [[#First steps for tweaking driver settings|create one]] if one does not exist. | ||

| + | # Change '''Ambient Occlusion compatibility''' value to the appropriate [[List of HBAO+ compatibility flags for Nvidia|compatibility flag]]. | ||

| + | # Change '''Ambient Occlusion setting''' to {{Code|Performance}} or {{Code|Quality}}. | ||

| + | #* In some cases, the "Performance" ambient occlusion setting may yield a stronger effect compared to the "Quality" setting while also providing better performance. | ||

| + | # Change '''Ambient Occlusion usage''' to {{Code|Enabled}}. | ||

| + | # Press '''Apply changes''' in the top-right corner. | ||

| + | }} | ||

| + | |||

| + | ===Layer Vulkan/OpenGL on DXGI swapchain=== | ||

| + | {{ii}} Layering Vulkan and OpenGL games on a DXGI swapchain allows for improved multitasking and using [[Glossary:High dynamic range (HDR)|Auto HDR]] on Windows 11.<ref>{{Refurl|url=https://www.reddit.com/r/nvidia/comments/yf6hiw/psa_you_can_now_elevate_openglvulkan_games_to_a/|title=Reddit - /r/nvidia - PSA - You can now elevate OpenGL/Vulkan games to a DXGI Swapchain on today's drivers (526.47)|date=2023-01-16}}</ref> | ||

| + | |||

| + | {{Fixbox|description=Instructions:|ref=<ref>{{Refcheck|user=Aemony|date=2023-01-16|comment=}}</ref>|fix= | ||

| + | # Launch [[Nvidia Control Panel]] and navigate to '''Manage 3D settings'''. | ||

| + | # Locate the '''Vulkan/OpenGL present method''' setting (typically at the bottom). | ||

| + | # Change it to {{Code|Prefer layered on DXGI Swapchain}}. | ||

| + | # Click on '''Apply''' to save the changes. | ||

| + | # Launch '''Nvidia Profile Inspector'''. | ||

| + | # Enable the '''Show unknown settings from NVIDIA predefined profiles''' option in the far right of the toolbar on top (the magnifying glass). | ||

| + | # Scroll down to section '''8 - Extra''' and locate the '''OGL_DX_PRESENT_DEBUG''' setting. | ||

| + | # Change it to {{Code|0x00080001}} by double clicking on the value field and copy/pasting the value. | ||

| + | #* This value will enable the DXGI swapchain layer in most scenarios, including [[DXVK]]. | ||

| + | # Press '''Apply changes''' in the top-right corner. | ||

| + | }} | ||
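The value entered in the steps above is a 32-bit hexadecimal number. As a minimal sketch, the following Python snippet shows how such a value breaks down into individual set bits. The helper name <code>set_bits</code> is hypothetical, and what each bit controls in the driver is not documented here, so this only illustrates the arithmetic and the eight-digit format used in the value field.

```python
def set_bits(value: int) -> list[int]:
    """Return the positions of all bits set in value, lowest bit first."""
    return [bit for bit in range(value.bit_length()) if (value >> bit) & 1]


# Value taken from the instructions above; what each bit controls is not
# documented here, so this only shows how the number is built up.
OGL_DX_PRESENT_DEBUG = 0x00080001

# Eight hex digits with a 0x prefix, matching how the value is written above.
formatted = f"0x{OGL_DX_PRESENT_DEBUG:08X}"
bits = set_bits(OGL_DX_PRESENT_DEBUG)

print(formatted, bits)
```

Comparing the formatted string against what was pasted is a quick way to catch a dropped or extra zero before applying the change.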

| + | |||

| + | ==Misc tweaks== | ||

| + | ===Prevent Ansel from being injected/enabled for a specific game=== | ||

| + | {{ii}} Using Nvidia Profile Inspector, it becomes possible to disable Ansel on a per-game basis if desired. | ||

| + | {{mm}} By default, the display drivers load Ansel-related DLL files into all running applications they interface with, even if the application does not support or cannot make use of Ansel. This can cause issues for third-party tools or applications. | ||

| + | |||

| + | {{Fixbox|description=Disable Ansel in the display driver profile for a specific game|fix= | ||

| + | # Download and run the latest version of the Profile Inspector, see [[#Installation|Installation]]. | ||

| + | # Using the '''Profiles''' list on top of the window, select the game-specific profile if one exists. If one does not exist, create a new one: | ||

| + | ## Click on the '''Create new profile''' icon (the sun). | ||

| + | ## Give the profile a relevant name. The name of the game is often used. | ||

| + | ## Click on the '''Add application to current profile''' icon (a small window with an icon of binoculars with a "+" above it). | ||

| + | ## Navigate to {{p|game}} and select the game executable and click '''Open'''. | ||

| + | # Scroll down to the '''Other''' section and configure '''Enable Ansel''' to <code>0x00000000 ANSEL_ENABLE_OFF</code>. | ||

| + | #* Setting may instead appear under '''Other''' section as '''NVIDIA Predefined Ansel Usage'''; set to <code>0x00000000 ANSEL_ALLOW_DISALLOWED</code>. | ||

| + | # Finally click on '''Apply changes''' to save the changes. | ||

| + | }} | ||

| + | |||

| + | ==Settings== | ||

| + | {{ii}} Keep changes to the base <code>_GLOBAL_DRIVER_PROFILE</code> profile limited, as overrides there are applied to all games and applications. | ||

| − | |||

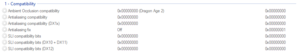

===Compatibility=== | ===Compatibility=== | ||

| − | + | {{Image|Nvidia Profile Inspector Compatibility.png|Compatibility settings}} | |

| − | + | {{ii}} Compatibility parameters that allow ambient occlusion, anti-aliasing, and SLI to be used in games when given an appropriate value. | |

| − | + | {{mm}} [[List of HBAO+ compatibility flags for Nvidia|HBAO+ Compatibility Flags]] | |

| − | + | {{mm}} [[List of anti-aliasing compatibility flags for Nvidia|Anti-Aliasing Compatibility Flags]] | |

| − | + | {{mm}} [https://www.forum-3dcenter.org/vbulletin/showthread.php?t=509912 SLI Compatibility Flags] (German) | |

| − | + | ||

| − | + | {| class="page-normaltable pcgwikitable" style="width:auto; min-width: 60%; max-width:900px; text-align: left;" | |

| − | + | |- | |

| − | + | ! style="min-width: 150px" | Parameter !! Description | |

| − | + | |- | |

| − | + | | '''Ambient Occlusion compatibility''' || Allows HBAO+ to work with any given game. | |

| − | + | |- | |

| − | + | | '''Antialiasing compatibility''' || Allows various forms of [[Glossary:Anti-aliasing (AA)|anti-aliasing]] to work with any given DirectX 9 game. | |

| − | + | |- | |

| − | + | | '''Antialiasing compatibility (DX1x)''' || Allows various forms of [[Glossary:Anti-aliasing (AA)|anti-aliasing]] to work with DirectX 10/11/12 games. Often restricted to MSAA and generally sees little use due to its limited capability. | |

| − | + | |- | |

| + | | '''Antialiasing Fix''' || Avoid black edges in some games while using MSAA.<ref>{{Refsnip|url=http://www.geeks3d.com/forums/index.php?topic=3159.0|title=Geeks3D Forums - Nvidia Inspector 1.9.7.2 Beta|date=2018-08-29|snippet=added FERMI_SETREDUCECOLORTHRESHOLDSENABLE as "Antialiasing fix" to avoid black edges in some games while using MSAA (R320.14+)}}</ref> Related to Team Fortress 2 and a few other games as well, where it is enabled by default. | ||

| + | |- | ||

| + | | '''SLI compatibility bits''' || Allows SLI to work in any given DirectX 9 game. | ||

| + | |- | ||

| + | | '''SLI compatibility bits (DX10+DX11)''' || Allows SLI to work in any given DirectX 10/11 game. | ||

| + | |- | ||

| + | | '''SLI compatibility bits (DX12)''' || Allows SLI to work in any given DirectX 12 game. | ||

| + | |- | ||

| + | |} | ||

| + | |||

| + | ===Sync and Refresh=== | ||

| + | {{Image|Nvidia Profile Inspector Sync and Refresh.png|Sync and Refresh settings}} | ||

| + | {{Image|Nvidia Profile Inspector G-Sync Indicator.png|The built-in G-Sync status indicator overlay}} | ||

| + | {{mm}} See [[Glossary:Frame rate (FPS)|Frame rate (FPS)]] for relevant information. | ||

| − | + | {{--}} The built-in frame rate limiter of the Nvidia display drivers introduces 2+ frames of delay, while [[Glossary:Frame rate (FPS)#Frame rate capping|RTSS]] (and possibly other alternatives) only introduces 1 frame of delay.<ref>{{Refsnip|url=https://www.blurbusters.com/gsync/gsync101-input-lag-tests-and-settings/11/|title=Blur Busters - G-SYNC 101: In-game vs. External FPS Limiters|date=|snippet=Needless to say, even if an in-game framerate limiter isn’t available, RTSS only introduces up to 1 frame of delay, which is still preferable to the 2+ frame delay added by Nvidia’s limiter with G-SYNC enabled, and a far superior alternative to the 2-6 frame delay added by uncapped G-SYNC.}}</ref> | |

| − | + | {{mm}} [https://www.anandtech.com/show/2794/2 AnandTech - Triple Buffering: Why We Love It] - Recommended reading about triple buffering/Fast Sync. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | {| class="page-normaltable pcgwikitable" style="width:auto; min-width: 60%; max-width:900px; text-align: left;" | |

| − | + | |- | |

| − | + | ! style="min-width: 150px" | Parameter !! Description | |

| − | + | |- | |

| − | + | | '''Flip Indicator''' || Enables an on-screen display of frames presented using flip model. | |

| + | |- | ||

| + | | '''Frame Rate Limiter''' || Enables the built-in [[Glossary:Frame rate (FPS)#Frame rate capping|frame rate limiter]] of the display drivers at the specified FPS. | ||

| + | |- | ||

| + | | '''Frame Rate Limiter Mode''' || Controls what mode of the frame rate limiter will be used. | ||

| + | |- | ||

| + | | '''GSYNC - Application Mode''' || Controls whether the G-Sync feature will be active in fullscreen only, or in windowed mode as well. | ||

| + | |- | ||

| + | | '''GSYNC - Application Requested State''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''GSYNC - Application State''' || style="text-align: left" | Selects the technique used to control the refresh policy of an attached monitor. | ||

| − | + | * '''Allow''' enables the use of G-Sync, and synchronizes the monitor refresh rate to the GPU's render target. | |

| − | + | * '''Force off''' and '''Disallow''' disable the use of G-Sync. | |

| − | *''' | + | * '''Fixed Refresh Rate''' is the traditional fixed refresh rate monitor technology. |

| − | + | * '''Ultra Low Motion Blur''' (ULMB) uses back light pulsing at a fixed refresh rate to minimize blur. | |

| − | |||

| − | |||

| − | *''' | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | *''' | ||

| − | |||

| − | |||

| − | *''' | ||

| − | |||

| − | + | It is highly recommended to change this using '''[[Nvidia Control Panel]] > Manage 3D Settings > Monitor Technology''' instead, so that this and related parameters are configured properly. | |

| − | + | |- | |

| − | + | | '''GSYNC - Global Feature''' || Toggles the global G-Sync functionality. It is recommended to change this using '''[[Nvidia Control Panel]] > Set up G-SYNC''' instead, so that this and related parameters are configured properly. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

|- | |- | ||

| − | | | + | | '''GSYNC - Global Mode''' || Controls whether the G-Sync feature will be active globally in fullscreen only, or in windowed mode as well. |

|- | |- | ||

| − | | | + | | '''GSYNC - Indicator Overlay''' || Enables a semi-transparent on-screen status indicator of when G-Sync is being used. If G-Sync is not being used, the indicator is not shown at all. |

|- | |- | ||

| − | | | + | | '''Maximum pre-rendered frames''' || Controls how many frames the CPU can prepare ahead of the frame currently being drawn by the GPU. Increasing this can result in smoother gameplay at lower frame rates, at the cost of additional input delay.<ref>{{Refsnip|url=https://forums.geforce.com/default/topic/476977/sli/maximum-pre-rendered-frames-for-sli/post/3405124/#3405124|title=Maximum Pre-Rendered frames for SLI|date=2016-10-12|snippet=The 'maximum pre-rendered frames' function operates within the DirectX API and serves to explicitly define the maximum number of frames the CPU can process ahead of the frame currently being drawn by the graphics subsystem. This is a dynamic, time-dependent queue that reacts to performance: at low frame rates, a higher value can produce more consistent gameplay, whereas a low value is less likely to introduce input latencies incurred by the extended storage time (of higher values) within the cache/memory. Its influence on performance is usually barely measurable, but its primary intent is to control peripheral latency.}}</ref> This setting does not apply to SLI configurations. |

| + | |||

| + | * Default is '''Use the 3D application setting''', and it is recommended not to go above '''3'''. Values of 1 and 2 will help reduce input latency in exchange for greater CPU usage.<ref>{{Refurl|url=https://displaylag.com/reduce-input-lag-in-pc-games-the-definitive-guide/|title=DisplayLag - Reduce Input Lag in PC Games: The Definitive Guide|date=2018-08-29}}</ref> | ||

| + | * When Vsync is set to <code>1/2 Refresh Rate</code>, a value of 1 is essentially required due to the introduced input latency.{{cn|date=October 2016}} | ||

|- | |- | ||

| − | | | + | | '''Preferred Refresh Rate''' || Controls the refresh rate override of the display drivers for games running in exclusive fullscreen mode. This also dictates the upper limit of the G-Sync range, as G-Sync will go inactive and the screen will start to tear at frame rates above the configured refresh rate, or V-Sync will kick in and sync the frame rate to the refresh rate if enabled. |

| + | |||

| + | * '''Highest available''' - Overrides the refresh rate of the exclusive fullscreen game to whatever is the highest available on the monitor. This setting is automatically used when G-Sync is enabled. Note that this override might not work for all games, in which case an alternative such as [[Special K]] might be needed. | ||

| + | * '''Use the 3D application setting / Application-controlled''' - Uses the refresh rate as requested by the application. If using G-Sync, frame rates above the requested refresh rate will result in screen tearing as G-Sync goes inactive, or in V-Sync synchronizing the frame rate to the refresh rate if it is enabled. | ||

| + | {{ii}} This setting is only exposed in [[Nvidia Control Panel]] for monitors supporting refresh rates of at least 100 Hz. | ||

| + | {{ii}} This setting is only effective in DX9/10/11 applications. On Windows 10 (and above) - [[Windows#Fullscreen_optimizations|Fullscreen optimizations]] must also be disabled. | ||

|- | |- | ||

| − | | | + | | '''Triple buffering''' || Toggles triple buffering in '''OpenGL''' games. Enabling this improves performance when vertical sync is also turned on. |

|- | |- | ||

| − | | + | '''Vertical Sync''' || Controls vertical sync, or enables '''Fast Sync'''. Fast Sync is essentially triple buffering for DirectX games, and is only applicable to frame rates above the refresh rate when regular V-Sync is disabled. |

| + | |- | ||

| + | | '''Vertical Sync Smooth AFR behavior''' || Toggles V-Sync smoothing behavior when V-Sync is enabled and SLI is active. | ||

| + | |||

| + | '''Official description:'''<ref>{{Refurl|url=https://nvidia.custhelp.com/app/answers/detail/a_id/3283/~/what-is-smooth-vsync|title=Nvidia - What is Smooth Vsync?|date=2018-08-29}}</ref> | ||

| + | |||

| + | Smooth Vsync is a new technology that can reduce stutter when Vsync is enabled and SLI is active. | ||

| + | |||

| + | When SLI is active and natural frame rates of games are below the refresh rate of your monitor, traditional Vsync forces frame rates to quickly oscillate between the refresh rate and half the refresh rate (for example, between 60 Hz and 30 Hz). This variation is often perceived as stutter. Smooth Vsync improves this by locking into the sustainable frame rate of your game and only increasing the frame rate if the game performance moves sustainably above the refresh rate of your monitor. This does lower the average framerate of your game, but the experience in many cases is far better. | ||

| + | |- | ||

| + | | '''Vertical Sync Tear Control''' || Toggles '''''Adaptive'' V-Sync''' when vertical sync is enabled. Not available when G-Sync is enabled. | ||

| + | |||

| + | '''Official description:'''<ref>{{Refurl|url=https://www.geforce.com/hardware/technology/adaptive-vsync|title=Nvidia - Adaptive VSync|date=2018-08-29}}</ref> | ||

| + | |||

| + | Nothing is more distracting than frame rate stuttering and screen tearing. The first tends to occur when frame rates are low, the second when frame rates are high. Adaptive VSync is a smarter way to render frames using Nvidia Control Panel software. At high framerates, VSync is enabled to eliminate tearing. At low frame rates, it's disabled to minimize stuttering. | ||

|- | |- | ||

| − | |||

|} | |} | ||
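The limiter delays quoted in this section are given in frames, which only translate to time once a frame rate is fixed. As a rough sketch, the snippet below converts a delay in frames to milliseconds. The function name <code>frames_to_ms</code> and the 60 FPS cap are assumptions chosen for illustration, and real-world latency depends on more than this simple conversion.

```python
def frames_to_ms(frames: float, fps: float) -> float:
    """Convert a delay measured in frames to milliseconds at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frames * 1000.0 / fps


# Illustrative comparison at a 60 FPS cap (the cap itself is an assumption).
for label, frames in (("1 frame", 1), ("2 frames", 2)):
    print(f"{label} at 60 FPS = {frames_to_ms(frames, 60):.1f} ms")
```

At a 60 FPS cap one frame lasts roughly 16.7 ms, so each additional frame of limiter delay adds about that much on top.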

| − | + | ||

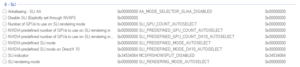

===Antialiasing=== | ===Antialiasing=== | ||

| − | + | {{Image|Nvidia Profile Inspector Antialiasing.png|Anti-aliasing settings}} | |

| − | + | {{mm}} See [[Glossary:Anti-aliasing (AA)|Anti-aliasing (AA)]] for relevant information. | |

| − | + | {{mm}} [[List of anti-aliasing compatibility flags for Nvidia|Anti-Aliasing Compatibility Flags]] | |

| − | + | ||

| − | + | {| class="page-normaltable pcgwikitable" style="width:auto; min-width: 60%; max-width:900px; text-align: left;" | |

| − | |||

| − | |||

| − | |||

| − | {| class=" | ||

|- | |- | ||

| − | | | + | ! style="min-width: 150px" | Parameter !! Description |

|- | |- | ||

| − | Override Application Setting | + | '''Antialiasing - Behavior Flags''' || These mostly exist as a method of governing usage of AA from Nvidia Control Panel, though they affect Nvidia Profile Inspector as well. |

| + | {{ii}} Make sure to clear any flags in this field for a game profile when forcing AA, as leftover flags will interfere and prevent the override from working. | ||

| + | |- | ||

| + | | '''Antialiasing - Gamma Correction''' || Gamma correction for MSAA. | ||

| + | {{ii}} Introduced with [[Half-Life 2]] in 2004. | ||

| + | {{ii}} Defaults to <code>ON</code> starting with Fermi GPUs.<ref>{{Refurl|url=http://www.anandtech.com/show/2116/12|title=NVIDIA's GeForce 8800 (G80): GPUs Re-architected for DirectX 10 - What's Gamma Correct AA?|date=2016-10-12}}</ref> | ||

| + | {{ii}} Should not be set to <code>OFF</code> on modern hardware.{{cn}} | ||

| + | |- | ||

| + | | '''Antialiasing - Line gamma''' || Applies gamma correction for singular lines (like wires) as compared to edges.<ref>{{Refurl|url=https://forums.geforce.com/default/topic/873686/geforce-drivers/what-does-quot-line-gamma-quot-do-for-anti-aliasing-/post/4659571/#4659571|title=GeForce Forums - What does "Line Gamma" do for Anti-Aliasing?|date=2018-08-30}}</ref> | ||

| + | |- | ||

| + | '''Antialiasing - Mode''' || Determines the mode in which the anti-aliasing override of the display driver functions for an application: | ||

| + | |||

| + | * '''Application Controlled''' - The application itself controls anti-aliasing settings and techniques. The display driver does not override or enhance the anti-aliasing setting the application configures. | ||

| + | * '''Override Application Setting''' - The display driver overrides the anti-aliasing setting of the application. This allows one to force anti-aliasing from the display driver. As a rule of thumb, disable any in-game anti-aliasing/MSAA when using this to avoid conflicts. There are exceptions, which are generally noted in the [[List of anti-aliasing compatibility flags for Nvidia|Anti-Aliasing Compatibility Flags]] document. | ||

| + | * '''Enhance Application Setting''' - Enhances the anti-aliasing of a game (e.g. enhance in-game MSAA with TrSSAA), which can provide higher quality and greater reliability for applications with built-in support for anti-aliasing. You must set any anti-aliasing level within the application for this mode to work with the '''Antialiasing - Setting''' override. | ||

| + | |||

| + | {{ii}} Use <code>Override Application Setting</code> if the application does not have built-in anti-aliasing settings or if the application does not support anti-aliasing when [[Glossary:High dynamic range (HDR)#HDR rendering|HDR rendering]] is enabled. When dealing with modern games, you will most likely want to use this option rather than either of the other two. | ||

| + | {{ii}} <code>Enhance Application Setting</code> is entirely dependent on the implementation of MSAA in the application itself. This can be hit or miss in modern DirectX 10+ games; more often than not it either does not work at all, breaks something, or looks very bad. See [[#Enhance application setting|Enhance application setting]] for more information. | ||

| + | {{ii}} DirectX 10+ games ignore this flag and always treat it as {{code|Enhance Application Setting}}.<ref>{{Refcheck|user=SirYodaJedi|date=2019-09-10|comment=}}</ref> | ||

| + | |- | ||

| + | | '''Antialiasing - Setting''' || {{mm}} [https://community.pcgamingwiki.com/topic/1858-aa-af-vsync-and-forcing-through-gpu/?p=8482 Explanation of MSAA methods] | ||

| + | This is where the specific method of forced/enhanced MSAA or [[FSAA|OGSSAA]] is set. | ||

| + | |- | ||

| + | | '''Antialiasing - Transparency Multisampling''' || {{mm}} [http://http.download.nvidia.com/developer/SDK/Individual_Samples/DEMOS/Direct3D9/src/AntiAliasingWithTransparency/docs/AntiAliasingWithTransparency.pdf Technical explanation] | ||

| + | {{mm}} [https://web.archive.org/web/20160506025723/http://screenshotcomparison.com/comparison/149642 Interactive example] | ||

| + | {{ii}} This enables and disables the use of transparency multisampling. | ||

| + | |- | ||

| + | | '''Antialiasing - Transparency Supersampling''' || | ||

| + | {{mm}} [https://community.pcgamingwiki.com/topic/1858-aa-af-vsync-and-forcing-through-gpu/?p=8482 Further Reference] | ||

| + | {{ii}} This sets whether <code>Supersampling</code> or <code>Sparse Grid Supersampling</code> is used. | ||

| + | |- | ||

| + | | '''Enable Maxwell sample interleaving (MFAA)''' || | ||

| + | {{mm}}[http://www.geforce.com/whats-new/articles/multi-frame-sampled-anti-aliasing-delivers-better-performance-and-superior-image-quality Introduction to MFAA] | ||

| + | This enables Nvidia's Multi Frame Anti Aliasing mode. This only works in DXGI (DX10+) and requires MSAA to be either enabled in the game or forced through the driver. | ||

| + | It changes the sub-sample grid pattern every frame, and the result is then reconstructed in motion with what Nvidia calls a "Temporal Synthesis Filter".<br> | ||

| + | There are some caveats to using this though.<br> | ||

| + | {{ii}} It is not compatible with SGSSAA as far as shown in limited testing.{{cn|date=October 2016}} | ||

| + | {{ii}} Depending on the game, MFAA causes visible flickering on geometric edges and other temporal artifacts. Part of this is nullified with downsampling.{{cn|date=October 2016}} | ||

| + | {{ii}} It has a minimum framerate requirement of about 40FPS. Otherwise MFAA will degrade the image. | ||

| + | {{--}} Visible sawtooth patterns with screenshots and videos captured locally.{{cn|date=October 2016}} | ||

| + | {{--}} Incompatible with SLI.{{cn|date=October 2016}} | ||

| + | |||

| + | |- | ||

| + | | '''Nvidia Predefined FXAA Usage''' || Controls whether Nvidia's FXAA implementation is allowed to be enabled for an application through [[Nvidia Control Panel]] (primarily) or Nvidia Profile Inspector. | ||

| + | |- | ||

| + | | '''Toggle FXAA indicator on or off''' || If enabled, displays a small green icon in the upper left corner showing whether FXAA is enabled or not. | ||

| + | |- | ||

| + | | '''Toggle FXAA on or off''' || FXAA is a fast shader-based post-processing technique that can be applied to any application, including those which do not support other forms of hardware-based antialiasing. FXAA can also be used in conjunction with other antialiasing settings to improve overall image quality. | ||

| + | |||

| + | * Turn FXAA <code>On</code> to improve image quality with a lesser performance impact than other antialiasing settings. | ||

| + | * Turn FXAA <code>Off</code> if you notice artifacts or dithering around the edges of objects, particularly around text. | ||

| + | |||

| + | {{ii}} Enabling this setting globally may affect all programs rendered on the GPU, including video players and the Windows desktop. | ||

|- | |- | ||

| − | |||

|} | |} | ||

| − | |||

| − | |||

| − | |||

| − | + | ====Enhance application setting==== | |

| − | + | {{ii}} When overriding the in-game MSAA fails, you can instead replace (enhance) the in-game MSAA with the Nvidia MSAA technique of your choice. | |

| − | + | {{ii}} These usually require anti-aliasing compatibility flags to work properly in DirectX 9 applications; see the [[List of anti-aliasing compatibility flags for Nvidia|List of anti-aliasing compatibility flags]]. | |

| − | + | {{mm}} The level of supersampling must match a corresponding level of MSAA. For example, if 4x MSAA is used, then 4x Sparse Grid Supersampling must be used. | |

| + | {{mm}} In OpenGL, <code>Supersampling</code> is equivalent to <code>Sparse Grid Supersampling</code>. Ergo, 4xMSAA+4xTrSSAA will give you 4xFSSGSSAA in OpenGL games where it works. | ||

| − | + | '''Supersampling''' | |

| − | + | {{++}} Inexpensive | |

| − | '' | + | {{++}} Does not require MSAA to work |

| − | + | {{--}} Only works on alpha-tested surfaces | |

| − | + '''Full-Screen Sparse Grid Supersampling''' |

| − | + | {{++}} Extremely high-quality supersampling | |

| − | + | {{++}} Works on the entire image | |

| + | {{--}} Requires MSAA to work | ||

| + | {{--}} Very expensive | ||
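
The matching rule noted above (the supersampling level must equal the MSAA level) can be expressed as a small check. This is only a minimal sketch: the function name <code>is_valid_sgssaa_combo</code> is hypothetical and is not part of Nvidia Profile Inspector or the driver, and the accepted sample counts are assumed to be limited to the 2x, 4x and 8x levels mentioned on this page.

```python
# Sample counts discussed on this page (assumption: other levels are out of scope here).
SUPPORTED_SAMPLES = (2, 4, 8)


def is_valid_sgssaa_combo(msaa_samples: int, sgssaa_samples: int) -> bool:
    """Return True when the SGSSAA level matches the MSAA level.

    Per the note above, NxSGSSAA must be paired with NxMSAA,
    for example 4x MSAA with 4x Sparse Grid Supersampling.
    """
    if msaa_samples not in SUPPORTED_SAMPLES or sgssaa_samples not in SUPPORTED_SAMPLES:
        return False
    return msaa_samples == sgssaa_samples


if __name__ == "__main__":
    print(is_valid_sgssaa_combo(4, 4))  # matching levels
    print(is_valid_sgssaa_combo(8, 4))  # mismatched levels
```

A helper like this could be used to sanity-check a planned profile before entering the values in the tool by hand.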

| − | + | ===Texture Filtering=== | |

| − | + | {{Image|Nvidia Profile Inspector Texture Filtering.png|Texture filtering settings}} | |

| − | + | {{mm}} See [[Glossary:Anisotropic filtering (AF)|Anisotropic filtering (AF)]] for relevant information. | |

| − | + | {| class="page-normaltable pcgwikitable" style="width:auto; min-width: 60%; max-width:900px; text-align: left;" | |

| − | + | |- | |

| − | + | ! style="min-width: 150px" | Parameter !! Description | |

| − | + | |- | |

| − | + | | '''Anisotropic filtering mode''' || Toggles the built-in anisotropic filtering override of the display drivers. | |

| − | + | ||

| − | [ | + | {{ii}} <code>16x</code> results in the best quality. |

| − | + | {{ii}} It is possible to add a global override that forces anisotropic filtering on all games. This can solve texture filtering issues that would otherwise exist in games that have mediocre texture filtering. See [[#Why force Anisotropic Filtering (AF)?|Why force Anisotropic Filtering (AF)?]] for details. | |

| − | + | |- | |

| − | + | | '''Anisotropic filtering setting''' || Controls the level of texture filtering the driver override applies, if '''Anisotropic filtering mode''' is set to <code>User-defined / Off</code>. | |

| − | + | ||

| − | + | {{ii}} Modern GPUs can perform 4x anisotropic filtering at no performance penalty.<ref>{{Refcheck|user=Aemony|date=2018-08-30|comment=This is a comment by the modder Kaldaien in his Special K mod for Ys 8, which among other things included an anisotropic filtering override. The comment is shown while hovering the mouse over the anisotropic filtering override.}}</ref> | |

| − | + | |- | |

| − | + | | '''Prevent anisotropic filtering''' || Similar to AA Behavior Flags, if this is set to <code>On</code> it will ignore user-defined driver overrides. Some games default to this, such as [[Quake 4]], [[RAGE]], [[Doom (2016)]], [[Far Cry 3]], and [[Far Cry 3: Blood Dragon]]. | |

| − | + | |- | |

| − | This | + | | '''Texture filtering - Anisotropic filter optimization''' || Anisotropic filter optimization improves performance by limiting trilinear filtering to the primary texture stage where the general appearance and color of objects is determined. Bilinear filtering is used for all other stages, such as those used for lighting, shadows, and other effects. This setting only affects DirectX programs. |

| − | + | ||

| − | + | * Select <code>On</code> for higher performance with a minimal loss in image quality | |

| − | '' | + * Select <code>Off</code> if you see shimmering on objects |

| − | + | ||

| − | + | |- | |

| − | + | | '''Texture Filtering - Anisotropic sample optimization''' || Anisotropic sample optimization limits the number of anisotropic samples used based on texel size. This setting only affects DirectX programs. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | *''' | + | * Select <code>On</code> for higher performance with a minimal loss in image quality |

| − | + | * Select <code>Off</code> if you see shimmering on objects | |

| − | + | |- | |

| − | + | '''Texture Filtering - Driver Controlled LOD Bias''' || When SGSSAA is in use, enabling this allows the driver to compute its own negative LOD bias for textures, improving sharpness (at the cost of potentially more aliasing) for those who prefer it. The computed bias is generally less than the fixed amounts that are recommended.{{cn|date=October 2016}} |

| − | |||

| − | |||

| − | |||

| − | + | {{ii}} Manual LOD bias will be ignored when this is enabled. | |

| + | |- | ||

| + | | '''Texture Filtering - LOD Bias (DX)''' || {{mm}} [https://web.archive.org/web/20160514011908/http://screenshotcomparison.com/comparison/159382 Interactive example] | ||

| + | {{mm}} [https://web.archive.org/web/20181019132341/https://naturalviolence.webs.com/lodbias.htm Explanation of LOD bias] | ||

| + | The level of detail bias setting for textures in DirectX applications. This normally only works under two circumstances, both of which require '''Texture Filtering - Driver Controlled LOD Bias''' to be set to <code>Off</code>. | ||

| − | + # When '''Antialiasing - Mode''' is set to <code>Override Application Setting</code> or <code>Enhance Application Setting</code>, and an appropriate '''Antialiasing - Setting''' is selected. |

| − | + # When '''Antialiasing - Mode''' is left at <code>Application Controlled</code> or <code>Enhance Application Setting</code> but the anti-aliasing and transparency settings are set to SGSSAA (e.g. 4xMSAA and 4xSGSSAA; TrSSAA in OpenGL). In this case the LOD bias can be set freely and the changes apply without forcing anti-aliasing. As a side effect, in some games that have MSAA, this acts as if you were "enhancing" the game setting even when using <code>Application Controlled</code>. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | '''Notes:''' | |

| − | + | {{ii}} If you wish to use a negative LOD bias when forcing SGSSAA, these are the recommended amounts: | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | If you wish to use a | ||

#2xSGSSAA (2 samples): -0.5 | #2xSGSSAA (2 samples): -0.5 | ||

#4xSGSSAA (4 samples): -1.0 | #4xSGSSAA (4 samples): -1.0 | ||

| − | #8xSGSSAA (8 samples): -1.5 | + | #8xSGSSAA (8 samples): -1.5 |

| − | Do not use a | + | {{ii}} Do not use a negative LOD bias when using OGSSAA and HSAA as they have their own LOD bias.<ref>{{Refurl|url=https://web.archive.org/web/20180716171211/https://naturalviolence.webs.com/sgssaa.htm|title=Natural Violence - SGSSAA (archived 16 July 2018)|date=2016-10-12}}</ref> |

| − | + | |- | |

| − | + | | '''Texture Filtering - LOD Bias (OGL)''' || Identical to '''Texture Filtering - LOD Bias (DX)''' but applies to OpenGL applications instead. | |

| − | + | |- | |

| − | This | + | | '''Texture Filtering - Negative LOD bias''' || Some applications use negative LOD bias to sharpen texture filtering. This sharpens the stationary image but introduces aliasing ("shimmering") when the scene is in motion. This setting controls whether negative LOD bias are clamped (not allowed) or allowed. |

| − | + {{ii}} Has no effect on Kepler GPUs and beyond unless using Nvidia SSAA (SG, OG or Hybrid); can be worked around by setting '''Antialiasing - Transparency Supersampling''' to <code>AA_MODE_REPLAY_MODE_ALL</code>.<ref>{{Refurl|url=https://forums.guru3d.com/threads/negative-lod-bias.394493/page-2#post-4958052|title=Negative LOD bias - Post #22|date=9 June 2023}}</ref><ref>{{Refurl|url=https://www.nvidia.com/en-us/geforce/forums/discover/149215/clamp-negative-lod-bias/#4174240|title=Clamp negative LOD bias|date=May 2023}}</ref> |

| − | + | |- | |

| − | + | | '''Texture Filtering - Quality''' || This setting allows you to decide if you would prefer performance, quality, or a balance between the two. If you change this using the [[Nvidia Control Panel]], the control panel will make all of the appropriate texture filtering adjustments based on the selected preference. | |

| − | + | ||

| − | + {{ii}} Should be set to or left at <code>High Quality</code> unless using a GeForce 8 series GPU or earlier.{{cn|August 2018}} |

| + | |- | ||

| + | | '''Texture Filtering - Trilinear Optimization''' || Trilinear optimization improves texture filtering performance by allowing bilinear filtering on textures in parts of the scene where trilinear filtering is not necessary. This setting only affects DirectX applications. | ||

| + | |||

| + | {{ii}} Disabled if '''Texture Filtering - Quality''' is set to <code>High Quality</code>. | ||

| + | |- | ||

| + | |} | ||
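
The recommended negative LOD bias values listed in the table above can be kept in a small lookup. The following is a minimal sketch, not anything provided by the driver or by Nvidia Profile Inspector: the function names are hypothetical, and the closed-form expression <code>-0.5 * log2(samples)</code> is only an assumption that happens to reproduce the three listed values; the source does not state a formula.

```python
import math

# Recommended values quoted in the table above (2x, 4x and 8x SGSSAA).
RECOMMENDED_LOD_BIAS = {2: -0.5, 4: -1.0, 8: -1.5}


def recommended_lod_bias(samples: int) -> float:
    """Look up the recommended negative LOD bias for NxSGSSAA."""
    try:
        return RECOMMENDED_LOD_BIAS[samples]
    except KeyError:
        raise ValueError(f"no recommendation listed for {samples}x SGSSAA") from None


def lod_bias_from_formula(samples: int) -> float:
    """Assumed closed form that matches the listed values: -0.5 * log2(samples)."""
    return -0.5 * math.log2(samples)


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(n, recommended_lod_bias(n), lod_bias_from_formula(n))
```

The lookup deliberately raises an error for sample counts that are not listed, rather than extrapolating from the assumed formula.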

| + | |||

===Common=== | ===Common=== | ||

| − | + | {{Image|Nvidia Profile Inspector Common.png|Common settings}} | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | "Shaders are used in almost every game, adding numerous visual effects that can greatly improve image quality (you can see the dramatic impact of shader technology in Watch Dogs here). Generally, shaders are compiled during loading screens, or during gameplay in seamless world titles, such as Assassin's Creed IV, Batman: Arkham Origins, and the aforementioned Watch Dogs. During loads their compilation increases the time it takes to start playing, and during gameplay increases CPU usage, lowering frame rates. When the shader is no longer required, or the game is closed, it is | + | {| class="page-normaltable pcgwikitable" style="width:auto; min-width: 60%; max-width:900px; text-align: left;" |

| + | |- | ||

| + | ! style="min-width: 150px" | Parameter !! Description | ||

| + | |- | ||

| + | | '''Ambient Occlusion setting''' || This setting determines what mode of ambient occlusion is to be used when forced in games with no built-in support for it: | ||

| + | |||

| + | * '''Performance''' | ||

| + | * '''Quality''' | ||

| + | * '''High Quality''' | ||

| + | {{ii}} <code>Quality</code> and <code>High Quality</code> are nearly identical, while <code>Performance</code> noticeably lowers the resolution and precision of the effect in many games with less-accurate and stronger shading.{{cn|October 2016}} | ||

| + | {{ii}} Certain games need <code>Performance</code> to support forced ambient occlusion, such as [[Oddworld: New 'n' Tasty!]]{{cn|October 2016}} | ||

| + | |- | ||

| + | | '''Ambient Occlusion usage''' || Set this to <code>Enabled</code> when using forced ambient occlusion. | ||

| + | |- | ||

| + | | '''CUDA - Force P2 State''' || If set to <code>Off</code>, it forces the GPU into the P0 state for compute. | ||

| + | |- | ||

| + | | '''Extension limit''' || Extension limit indicates whether the driver extension string has been trimmed for compatibility with particular applications. Some older applications cannot process long extension strings and will crash if extensions are unlimited. | ||

| + | |||

| + | * If you are using an older OpenGL application, turning this option on may prevent crashing. | ||

| + | * If you are using a newer OpenGL application, you should turn this option off. | ||

| + | |- | ||

| + | | '''Multi-display/mixed-GPU acceleration''' || This controls GPU-based acceleration in OpenGL applications and will have no effect on performance on DirectX platforms.<ref>{{Refurl|url=https://forums.geforce.com/default/topic/449234/sli/mulit-display-mixed-gpu-acceleration-how-to-use-/post/3191385/#3191385|title=GeForce Forums - Mulit Display/Mixed GPU Acceleration How to use?|date=2018-08-30}}</ref> | ||

| + | |- | ||

| + | | '''OpenGL - Version Override''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Optimize for Compute Performance''' || This setting is intended to provide additional performance to non-gaming applications that use large CUDA address spaces and large amounts of GPU memory when run on graphics cards based on second-generation Maxwell GPUs. Graphics cards based on other architectures do not utilize this setting.<ref>{{Refurl|url=https://nvidia.custhelp.com/app/answers/detail/a_id/4370/~/what-does-the-setting-optimize-for-compute-performance-do%3F#:~:text=Updated%2001%2F25%2F2017%2011,on%20second%2Dgeneration%20Maxwell%20GPUs.|title=What does the setting "Optimize for Compute Performance" do?|date=2017-01-25}}</ref> | ||

| + | |- | ||

| + | | '''Power management mode''' || This allows you to set a preference for the performance level of the graphics card when running 3D applications. It is recommended to configure this on a per-game basis using game-specific profiles, to prevent unnecessary power draw while not gaming. | ||

| + | |||

| + | * '''Adaptive''' | ||

| + | {{ii}} Automatically determines whether to use a lower clock speed for games and apps. | ||

| + | {{++}} Works well when not playing games. | ||

| + | {{--}} Older Nvidia GPU Boost GPUs tend to incorrectly use a lower clock speed.{{cn|date=October 2016}} | ||

| + | {{mm}} Setting power management mode from <code>Adaptive</code> to <code>Maximum Performance</code> can improve performance in certain applications when the GPU is throttling the clock speeds incorrectly.<ref>https://nvidia.custhelp.com/app/answers/detail/a_id/3130/~/setting-power-management-mode-from-adaptive-to-maximum-performance</ref> | ||

| + | |||

| + | * '''Optimal power''' | ||

| + | {{ii}} Same as '''Adaptive''', but will not re-render frames when the GPU workload is idle; instead it will just display the previous frame. | ||

| + | {{mm}} The default power management mode on modern graphics cards. | ||

| + | |||

| + | * '''Prefer maximum performance''' | ||

| + | {{ii}} Prevents the GPU from switching to a lower clock speed for games and apps. | ||

| + | {{++}} Works well when playing games. | ||

| + | {{--}} Wasteful when not using GPU-intensive applications or games. | ||

| + | |- | ||

| + | | '''Shader cache''' || Enables or disables the shader cache, which saves compiled shaders to your hard drive to improve game performance.<ref>{{Refsnip|url=http://www.geforce.com/whats-new/articles/nvidia-geforce-337-88-whql-watch-dog-drivers|title=GeForce 337.88 WHQL, Game Ready Watch Dogs Drivers Increase Frame Rates By Up To 75%|date=2016-10-12|snippet=Shaders are used in almost every game, adding numerous visual effects that can greatly improve image quality (you can see the dramatic impact of shader technology in Watch Dogs here). Generally, shaders are compiled during loading screens, or during gameplay in seamless world titles, such as Assassin's Creed IV, Batman: Arkham Origins, and the aforementioned Watch Dogs. During loads their compilation increases the time it takes to start playing, and during gameplay increases CPU usage, lowering frame rates. When the shader is no longer required, or the game is closed, it is discarded, forcing its recompilation the next time you play.<br> | ||

In today's 337.88 WHQL drivers we've introduced a new NVIDIA Control Panel feature called "Shader Cache", which saves compiled shaders to a cache on your hard drive. Following the compilation and saving of the shader, the shader can simply be recalled from the hard disk the next time it is required, potentially reducing load times and CPU usage to optimize and improve your experience.<br> | In today's 337.88 WHQL drivers we've introduced a new NVIDIA Control Panel feature called "Shader Cache", which saves compiled shaders to a cache on your hard drive. Following the compilation and saving of the shader, the shader can simply be recalled from the hard disk the next time it is required, potentially reducing load times and CPU usage to optimize and improve your experience.<br> | ||

| − | By default the Shader Cache is enabled for all games, and saves up to 256MB of compiled shaders in %USERPROFILE%\AppData\Local\Temp\NVIDIA Corporation\NV_Cache. This location can be changed by moving your entire Temp folder using Windows Control Panel > System > System Properties > Advanced > Environmental Variables > Temp, or by using a Junction Point to relocate the NV_Cache folder. To change the use state of Shader Cache on a per-game basis simply locate the option in the NVIDIA Control Panel, as shown below. | + | By default the Shader Cache is enabled for all games, and saves up to 256MB of compiled shaders in %USERPROFILE%\AppData\Local\Temp\NVIDIA Corporation\NV_Cache. This location can be changed by moving your entire Temp folder using Windows Control Panel > System > System Properties > Advanced > Environmental Variables > Temp, or by using a Junction Point to relocate the NV_Cache folder. To change the use state of Shader Cache on a per-game basis simply locate the option in the NVIDIA Control Panel, as shown below.}}</ref> |

| − | + | ||

| − | + | {{mm}}Added in driver 337.88 | |

| − | '' | + | |- |

| − | + | | '''Show PhysX Visual Indicator''' || Enables an on-screen status indicator of when PhysX is being used. If PhysX is not being used, the indicator is not shown at all. | |

| − | + | |- | |

| − | + | | '''Threaded optimization''' || Allows '''OpenGL''' applications to take advantage of multiple CPUs. | |

| − | + | ||

| − | '' | + | {{ii}} Most newer applications should benefit from the <code>Auto</code> or <code>On</code> options. |

| − | + | {{ii}} This setting should be turned off for most older applications. | |

| − | + | |- | |

| − | + | |} | |

| − | + | ||

===SLI=== | ===SLI=== | ||

| − | + | {{Image|Nvidia Profile Inspector SLI.png|SLI settings}} | |

| − | + | ||

| − | In SLI8x mode for example, each GPU would then do 4xMSAA after which the final result becomes 4xMSAA+4xMSAA=8xMSAA.<br> | + | {| class="page-normaltable pcgwikitable" style="width:auto; min-width: 60%; max-width:900px; text-align: left;" |

| − | This can be useful in games without proper SLI support, so at least the second GPU is not just idling.<br> | + | |- |

| − | However it unfortunately only works correctly in OpenGL, and there will be no difference in temporal behavior between for example normal forced 4xMSAA+4xSGSSAA and SLI8x+8xSGSSAA in DX9. | + | ! style="min-width: 150px" | Parameter !! Description |

| − | + | |- | |

| − | + | | '''Antialiasing - SLI AA''' || This determines whether a Nvidia SLI setup splits the MSAA workload between the available GPUs.<ref>{{Refsnip|url=http://www.nvidia.com/object/slizone_sliAA_howto1.html|title=NVIDIA - SLI Antialiasing|date=2016-10-12|snippet=SLI AA essentially disables normal AFR rendering, and in 2-way mode will use the primary GPU for rendering + forced AA, while the secondary GPU is only used to do AA work.<br><br>In SLI8x mode for example, each GPU would then do 4xMSAA after which the final result becomes 4xMSAA+4xMSAA=8xMSAA.<br><br>This can be useful in games without proper SLI support, so at least the second GPU is not just idling.<br><br>However it unfortunately only works correctly in OpenGL, and there will be no difference in temporal behavior between for example normal forced 4xMSAA+4xSGSSAA and SLI8x+8xSGSSAA in DX9.}}</ref> | |

| − | + | |- | |

| − | + | | '''Disable SLI (Explicitly set through NVAPI)''' || If you know what this setting does, please add a description here. | |

| − | + | |- | |

| − | + | '''Number of GPUs to use on SLI rendering mode''' || Forces all rendering modes to utilize either one, two, three or four GPUs for rendering. |

| − | + | |- | |

| − | *''' | + | '''NVIDIA predefined number of GPUs to use on SLI rendering mode''' || Forces DX9 and OpenGL to utilize either one, two, three or four GPUs for rendering. |

| + | |- | ||

| + | | '''NVIDIA predefined number of GPUs to use on SLI rendering mode on DX10''' || Forces DX10, DX11 and DX12 to utilize either one, two, three or four GPUs for rendering. Using fewer than four can affect total bandwidth, but using four rather than two can cause artifacting in certain games. | ||

| + | |- | ||

| + | | '''NVIDIA predefined SLI mode''' || Forces DX9 and OpenGL to render using AFR, AFR 2, SFR (CFR) or single-GPU mode with SLI enabled in the Nvidia Control Panel. | ||

| + | |- | ||

| + | | '''NVIDIA predefined SLI mode on DX10''' || Forces DX10, DX11 and DX12 to render using AFR, AFR 2, SFR (CFR) or single-GPU mode with SLI enabled in the Nvidia Control Panel. Almost all games require AFR in DX11. NVLink with Turing allows CFR to be used in several games where AFR is not even usable. | ||

| + | |- | ||

| + | | '''SLI indicator''' || Enables a semi-transparent on-screen status indicator of when SLI is being used and how the workload is split between the cards. | ||

| + | |- | ||

| + | | '''SLI rendering mode''' || Forces the base SLI rendering mode: AFR, AFR 2, SFR (CFR) or single-GPU mode with SLI enabled in the Nvidia Control Panel (useful for quick switching to test FPS). Using this setting overrides the compatibility settings set in the SLI section, which is why it acts as the base SLI mode. | ||

| + | |- | ||

| + | |} | ||

| + | |||

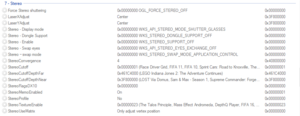

| + | ===Stereo=== | ||

| + | {{Image|Nvidia Profile Inspector Stereo.png|Stereo settings}} | ||

| + | {{ii}} Lists stereoscopic 3D settings related to Nvidia 3D Vision. More info can be found on [http://wiki.bo3b.net/index.php?title=Driver_Profile_Settings Bo3b's School for Shaderhackers]. | ||

| + | |||

| + | {| class="page-normaltable pcgwikitable" style="width:auto; min-width: 60%; max-width:900px; text-align: left;" | ||

| + | |- | ||

| + | ! style="min-width: 150px" | Parameter !! Description | ||

| + | |- | ||

| + | | '''Force Stereo shuttering''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''LaserXAdjust''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''LaserYAdjust''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Stereo - Display mode''' || This setting allows you to choose the appropriate display mode for shutter glasses, stereo displays, and other hardware. Refer to the hardware documentation to determine which mode to use. | ||

| + | |- | ||

| + | | '''Stereo - Dongle Support''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Stereo - Enable''' || Enables stereo in OpenGL applications. | ||

| + | |- | ||

| + | | '''Stereo - Swap eyes''' || Select this option to exchange the left and right images. | ||

| + | |- | ||

| + | | '''Stereo - Swap mode''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''StereoConvergence''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''StereoCutoff''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''StereoCutoffDepthFar''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''StereoCutoffDepthNear''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''StereoFlagsDX10''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''StereoMemoEnabled''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''StereoProfile''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''StereoTextureEnable''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''StereoUseMatrix''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | |} | ||

| + | |||

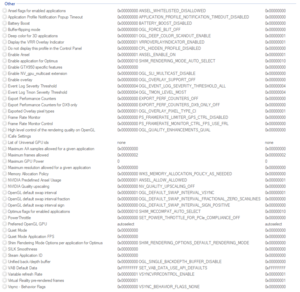

| + | ===Other=== | ||

| + | {{Image|Nvidia Profile Inspector Other.png|Other settings}} | ||

| + | {{ii}} Lists settings which do not match another category. | ||

| + | |||

| + | {| class="page-normaltable pcgwikitable" style="width:auto; min-width: 60%; max-width:900px; text-align: left;" | ||

| + | |- | ||

| + | ! style="min-width: 150px" | Parameter !! Description | ||

| + | |- | ||

| + | | '''Ansel flags for enabled applications''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Application Profile Notification Popup Timeout''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Battery Boost''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Buffer-flipping mode''' || Determines how the video buffer is copied to the screen. This setting only affects OpenGL and Vulkan programs. | ||

| + | * '''Auto-select''' - When possible, the driver converts fullscreen-sized windows into exclusive fullscreen. | ||

| + | * '''Use block transfer''' - Disables any driver conversions; all apps will work in legacy windowed mode. | ||

| + | {{ii}} Has no effect when '''Vulkan/OpenGL present method''' is set to {{Code|Prefer layered on DXGI Swapchain}}. | ||

| + | |- | ||

| + | | '''Deep color for 3D applications''' || Toggles 30-bit (10 bpc) deep color on compatible monitors on Quadro cards. Affects only OpenGL (windowed and fullscreen) and Direct3D 10 (fullscreen mode only). See [https://www.nvidia.com/docs/IO/40049/TB-04701-001_v02_new.pdf this technical brief] for further information. | ||

| + | |- | ||

| + | | '''Display the VRR Overlay Indicator''' || Draws a G-Sync logo when an application engages G-Sync. | ||

| + | |- | ||

| + | | '''Do not display this profile in the Control Panel''' || Hides the profile from being listed in [[Nvidia Control Panel]] > '''Manage 3D Settings''' > '''Program Settings'''. | ||

| + | |- | ||

| + | | '''Enable Ansel''' || Toggles Ansel (NvCamera) for the application. This is enabled by default and results in the display drivers injecting the DLL files for Ansel in all applications. | ||

| + | |- | ||

| + | | '''Enable application for Optimus''' || On an [[Nvidia#Optimus|Optimus laptop]], controls whether the selected application runs on the dedicated Nvidia GPU or the integrated GPU. | ||

| + | |- | ||

| + | | '''Enable GTX950 specific features''' || Enables GTX 950 latency optimizations in esports titles (Dota 2, Heroes of the Storm, League of Legends). | ||

| + | |- | ||

| + | | '''Enable NV_gpu_multicast extension''' || This extension enables novel multi-GPU rendering techniques by providing application control over a group of linked GPUs with identical hardware configuration. This setting only affects OpenGL programs. | ||

| + | |- | ||

| + | | '''Enable overlay''' || Allows the use of OpenGL overlay planes in programs. Typically used if a program fails to render OpenGL planes. | ||

| + | |- | ||

| + | | '''Event Log Severity Threshold''' || If you know what this setting does, please add a description here. This setting only affects OpenGL programs. | ||

| + | |- | ||

| + | | '''Event Log Tmon Severity Threshold''' || If you know what this setting does, please add a description here. This setting only affects OpenGL programs. | ||

| + | |- | ||

| + | | '''Export Performance Counters''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Export Performance Counters for DX9 only''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Exported Overlay pixel types''' || Determines whether the driver supports RGB or color indexed OpenGL overlay plane formats. Typically used if a program fails to set the proper OpenGL overlay plane format. | ||

| + | |- | ||

| + | | '''Frame Rate Monitor''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Frame Rate Monitor Control''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''High level control of the rendering quality on OpenGL''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''ICafe Settings''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''List of Universal GPU ids''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Maximum AA samples allowed for a given application''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Maximum frames allowed''' || Redundant setting for "Maximum pre-rendered frames". If set from the Nvidia Control Panel, both this and "Maximum pre-rendered frames" will be updated. | ||

| + | |- | ||

| + | | '''Maximum GPU Power''' || If you know what this setting does, please add a description here. (Not available in version 1.9.7.3) | ||

| + | |- | ||

| + | | '''Maximum resolution alllowed for a given application''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Memory Allocation Policy''' || This defines how workstation feature resource allocation is performed. | ||

| + | * '''As Needed (default)''' - Resources for workstation features are allocated as needed resulting in the minimum amount of resource consumption. Feature activation or deactivation often causes mode-sets. | ||

| + | * '''Moderate pre-allocation''' - Resources for the first workstation feature activated are statically allocated at system boot and persist thereafter. This will use more GPU and system memory, but will prevent mode-sets when activating or deactivating a single feature. Invocation of additional workstation features will still cause mode-sets. | ||

| + | * '''Aggressive pre-allocation''' - Resources for all workstation features are statically allocated at system boot and persist thereafter. This will use the most GPU and system memory, but will prevent mode-sets when activating or deactivating all workstation features. | ||

| + | |- | ||

| + | | '''NVIDIA Predefined Ansel Usage''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''NVIDIA Quality upscaling''' || Uses a Lanczos upscaling filter instead of a bilinear one. The result is a less blurry fullscreen picture at less than native resolutions. | ||

| + | |- | ||

| + | | '''OpenGL default swap interval''' || Toggles Vertical Sync behaviour, but specifically for OpenGL applications. | ||

| + | * '''0x00000000 OGL_DEFAULT_SWAP_INTERVAL_APP_CONTROLLED''' - Let the application decide. | ||

| + | * '''0x00000001 OGL_DEFAULT_SWAP_INTERVAL_VSYNC''' - Follow the Vertical Sync setting used under the "[[#Sync_and_Refresh|Sync and Refresh]]" section. | ||

| + | * '''0x10000000 OGL_DEFAULT_SWAP_INTERVAL_FORCE_ON''' - Force Vertical Sync on. | ||

| + | * '''0xF0000000 OGL_DEFAULT_SWAP_INTERVAL_FORCE_OFF''' - Force Vertical Sync off. | ||

| + | * '''0xFFFFFFFF OGL_DEFAULT_SWAP_INTERVAL_DISABLE''' - Same as FORCE_OFF | ||

| + | |- | ||

| + | | '''OpenGL default swap interval fraction''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''OpenGL default swap interval sign''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Optimus flags for enabled applications''' || Select which GPU is used when launching the application. | ||

| + | * '''0x00000000 SHIM_MCCOMPAT_INTEGRATED''' - Use integrated graphics. | ||

| + | * '''0x00000001 SHIM_MCCOMPAT_ENABLE''' - Use the dedicated GPU (Nvidia card). | ||

| + | * '''0x00000002 SHIM_MCCOMPAT_USER_EDITABLE''' - User chooses the GPU (via menu, control panel, or right-click menu). | ||

| + | * '''0x00000008 SHIM_MCCOMPAT_VARYING_BIT''' - If you know what this setting does, please add a description here. | ||

| + | * '''0x00000010 SHIM_MCCOMPAT_AUTO_SELECT''' - Use the automatic default option (decided by the Nvidia Control Panel). | ||

| + | * '''0x80000000 SHIM_MCCOMPAT_OVERRIDE_BIT''' - If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''PowerThrottle''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Preferred OpenGL GPU''' || Select the GPU to be used by OpenGL applications. | ||

| + | |- | ||

| + | | '''Quiet Mode''' || Turns Quiet Mode (also known as Whisper Mode) on or off. When on, the laptop/GPU runs quieter. | ||

| + | |- | ||

| + | | '''Quiet Mode Application FPS''' || Sets the maximum FPS for applications using the GPU while Quiet Mode is enabled. | ||

| + | |- | ||

| + | | '''Shim Rendering Mode Options per application for Optimus''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''SILK Smoothness''' || Silk reduces stutters in games caused by variable CPU or GPU workloads by smoothing out animation and presentation cadence using animation prediction and a post-render smoothing buffer. | ||

| + | |||

| + | * '''Off''' – Silk is disabled. | ||

| + | * '''Low''' – Moderate smoothing is enabled and most microstutter is eliminated. | ||

| + | * '''Medium''' – Many stutters and hitches are removed in typical games. | ||

| + | * '''High''' – More smoothing is applied and may result in observable input lag. | ||

| + | * '''Ultra''' – Maximum smoothing is applied and most stutters and hitches in games are eliminated. Lag may be unacceptable in some games. | ||

| + | |||

| + | {{ii}} Selecting the High or Ultra settings for Silk can noticeably increase input lag, and may not be appropriate for first-person shooters or competitive gaming. | ||

| + | |- | ||

| + | | '''Steam Application ID''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Unified back/depth buffer''' || This setting allows the OpenGL driver to allocate one back buffer and one depth buffer at the same resolution as the display. When this setting is enabled, OpenGL applications that create multiple windows use video memory more efficiently and show improved performance. When this setting is off, the OpenGL driver allocates a back buffer and depth buffer for every window created by an OpenGL application.<ref>https://www.manualslib.com/manual/557432/Nvidia-Quadro-Workstation.html?page=120</ref> | ||

| + | |- | ||

| + | | '''VAB Default Data''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Variable refresh Rate''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | | '''Virtual Reality pre-rendered frames''' || Limits the number of frames the CPU can prepare before the frames are processed by the GPU. Lower latency is preferable for virtual reality headsets. | ||

| + | |- | ||

| + | | '''Vsync - Behavior Flags''' || If you know what this setting does, please add a description here. | ||

| + | |- | ||

| + | |} | ||
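The values listed for "Optimus flags for enabled applications" look like bit flags, so a stored value may combine several of them. The following is a minimal sketch of how such a value could be decoded. The helper `decode_optimus_flags` is hypothetical and not part of Nvidia Profile Inspector, and treating the values as combinable bits is an assumption based only on their names.

```python
# Flag values copied from the "Optimus flags for enabled applications" row.
# Assumption: the non-zero values are independent bits that can be combined.
OPTIMUS_FLAGS = {
    0x00000001: "SHIM_MCCOMPAT_ENABLE",
    0x00000002: "SHIM_MCCOMPAT_USER_EDITABLE",
    0x00000008: "SHIM_MCCOMPAT_VARYING_BIT",
    0x00000010: "SHIM_MCCOMPAT_AUTO_SELECT",
    0x80000000: "SHIM_MCCOMPAT_OVERRIDE_BIT",
}


def decode_optimus_flags(value: int) -> list[str]:
    """Hypothetical helper: name the known bits set in a 32-bit value."""
    if value == 0:
        # Zero has its own name in the table (integrated graphics).
        return ["SHIM_MCCOMPAT_INTEGRATED"]
    names = [name for bit, name in OPTIMUS_FLAGS.items() if value & bit]
    # Report any bits that the table above does not describe.
    known_mask = sum(OPTIMUS_FLAGS)  # bits are distinct, so sum equals OR
    unknown = value & ~known_mask & 0xFFFFFFFF
    if unknown:
        names.append(f"unknown bits 0x{unknown:08X}")
    return names


print(decode_optimus_flags(0x00000011))
```

Bits that are not in the table are reported rather than silently dropped, since several flags in this section are still undocumented.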

| + | ===Unknown=== | ||

| + | {{Image|Nvidia Profile Inspector Unknown.png|Unknown settings}} | ||

| + | {{ii}} These are settings or overrides whose purpose is not known to Nvidia Profile Inspector. | ||

| + | {{mm}} Most of these are game-specific overrides only applicable to a few games, or new flags that were introduced in newer versions of the display drivers. | ||

| + | {{--}} Not all unknown settings can be properly exported and imported at will. If a built-in profile using one of those has been removed, the recommendation is to reinstall the display drivers to ensure they are restored fully. | ||

| + | {| class="page-normaltable pcgwikitable" style="width:auto; min-width: 60%; max-width:900px; text-align: left;" | ||

| + | |- | ||

| + | ! style="min-width: 150px" | Parameter !! Description | ||

| + | |- | ||

| + | | '''0x00666634''' || When set to 1 (0x00000001), marks applications covered by the profile as a Silk Test App. This is required for the "SILK Smoothness" setting to take effect. | ||

| + | |- | ||


| + | | '''0x10834FEE''' || When set to 2 (0x0020000), this value modifies the speed of everything, including the frame rate that Windows handles, which renders the Nvidia frame rate limiter v3 ineffective. Leave this value at 0x00000000 so that all applications behave normally. | ||

| + | |- | ||

| + | |} | ||

| + | |||

| + | <br style="clear: both"> | ||

{{References}} | {{References}} | ||

| + | [[Category:Utility]] | ||

Latest revision as of 16:03, 11 February 2024

|

|

| Developers | |

|---|---|

| Orbmu2k | |

| Release dates | |

| Windows | June 20, 2010[1] |

Nvidia Profile Inspector (NPI) is an open source third-party tool created for pulling up and editing application profiles within the Nvidia display drivers. It works much like the Manage 3D settings page in the Nvidia Control Panel but goes more in-depth and exposes settings and offers functionality not available through the native control panel.

The tool was spun out of the original Nvidia Inspector which featured an overclocking utility and required the user to create a shortcut to launch the profile editor separately.

Nvidia Profile Inspector typically sees maintenance updates released every couple of months, keeping it aligned with changes introduced in newer versions of the drivers, although the project was seemingly on a hiatus between January 19, 2021 and November 13, 2022. An unofficial fork was released in April 2022 with improved support for newer drivers, but it is no longer available following an official update from the original author.

General information

- HBAO+ Compatibility Flags

- Anti-Aliasing Compatibility Flags

- SLI Compatibility Flags (German)

- Official repository

Related articles

Availability

| Source | DRM | Notes | Keys | OS |

|---|---|---|---|---|

| Official website |

Installation

| Instructions |

|---|

Status indicators

|

First steps for tweaking driver settings

| Select (or create if missing) the display driver profile for the game |

|---|

|

Video

Anisotropic filtering (AF)

- This is an example of why it may be relevant to force high texture filtering globally as some developers' own solutions might not be as high quality as they could be.

- There is a risk of negative side effects occurring in some games as a result of a forced global driver-level override, with unexpected consequences ranging from textures looking wrong to some post-processing shaders being affected if they rely on a texture that has an unintended level of filtering applied to it. If Nvidia becomes aware of a game that conflicts with driver-forced texture filtering, they may set a special flag on the game profile that disables driver overrides of texture filtering for that game, such as with Far Cry 3 and Doom (2016), though this is not guaranteed, as proper in-depth compatibility testing is impossible to perform for all games.[2]

- A quicker way of doing this is to use Nvidia Control Panel and set Anisotropic filtering to 16x and Texture filtering - Quality to High quality under Manage 3D settings > Global Settings.

| Force highest available texture filtering settings system-wide |

|---|

|

Ambient occlusion (AO)

| Force HBAO+ for a select game |

|---|

|

Layer Vulkan/OpenGL on DXGI swapchain

- Layering Vulkan and OpenGL games on a DXGI swapchain allows for improved multitasking and using Auto HDR on Windows 11.[3]

| Instructions:[4] |

|---|

|

Misc tweaks

Prevent Ansel from being injected/enabled for a specific game

- Using Nvidia Profile Inspector, it becomes possible to disable Ansel on a per-game basis if desired.

- By default, the display drivers load Ansel-related DLL files into all running applications they interface with, even if the application does not support or cannot make use of Ansel. This can cause issues for third-party tools or applications.

| Disable Ansel in the display driver profile for a specific game |

|---|

|

Settings

- Keep changes to the base _GLOBAL_DRIVER_PROFILE profile limited, as overrides there are applied to all games and applications.

Compatibility

- Compatibility parameters that allow ambient occlusion, anti-aliasing, and SLI to be used for games, if given an appropriate value.

- HBAO+ Compatibility Flags

- Anti-Aliasing Compatibility Flags

- SLI Compatibility Flags (German)

| Parameter | Description |

|---|---|

| Ambient Occlusion compatibility | Allows HBAO+ to work with any given game. |

| Antialiasing compatibility | Allows various forms of anti-aliasing to work with any given DirectX 9 game. |

| Antialiasing compatibility (DX1x) | Allows various forms of anti-aliasing to work with DirectX 10/11/12 games. Often restricted to MSAA and generally sees little use due to its limited capability. |

| Antialiasing Fix | Avoid black edges in some games while using MSAA.[5] Related to Team Fortress 2 and a few other games as well, where it is enabled by default. |

| SLI compatibility bits | Allows SLI to work in any given DirectX 9 game. |

| SLI compatibility bits (DX10+DX11) | Allows SLI to work in any given DirectX 10/11 game. |

| SLI compatibility bits (DX12) | Allows SLI to work in any given DirectX 12 game. |

Sync and Refresh

- See Frame rate (FPS) for relevant information.

- The built-in frame rate limiter of the Nvidia display drivers introduces 2+ frames of delay, while RTSS (and possibly other alternatives) only introduces 1 frame of delay.[6]

- AnandTech - Triple Buffering: Why We Love It - Recommended reading about triple buffering/Fast Sync.
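To put the frame limiter delays mentioned above into perspective, frames of delay can be converted to milliseconds at a given frame rate. This is a rough sketch that assumes each frame of delay costs exactly one frame time at the target rate, which is a simplification. The function name `frame_delay_ms` is made up for illustration.

```python
def frame_delay_ms(frames: float, fps: float) -> float:
    """Convert a delay measured in frames to milliseconds at a given FPS.

    Assumption: one frame of delay equals one frame time (1000 / fps ms).
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frames * 1000.0 / fps


# Compare 1 frame of delay against 2 frames at two example frame rates.
for fps in (60, 144):
    one = round(frame_delay_ms(1, fps), 2)
    two = round(frame_delay_ms(2, fps), 2)
    print(f"{fps} FPS: 1 frame = {one} ms, 2 frames = {two} ms")
```

At 60 FPS this works out to roughly 16.67 ms for one frame and 33.33 ms for two, so the gap between limiters shrinks in absolute terms as the frame rate cap goes up.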

| Parameter | Description |

|---|---|

| Flip Indicator | Enables an on-screen display of frames presented using flip model. |

| Frame Rate Limiter | Enables the built-in frame rate limiter of the display drivers at the specified FPS. |

| Frame Rate Limiter Mode | Controls what mode of the frame rate limiter will be used. |

| GSYNC - Application Mode | Controls whether the G-Sync feature will be active in fullscreen only, or in windowed mode as well. |

| GSYNC - Application Requested State | If you know what this setting does, please add a description here. |

| GSYNC - Application State | Selects the technique used to control the refresh policy of an attached monitor.

It is highly recommended to change this using the Nvidia Control Panel > Manage 3D Settings > Monitor Technology instead to properly configure this and related parameters. |